- Founder, Work of the People and Working, International, a collaborative design and development studio, research art practice, and publishing imprint

- VP, Design, MasterClass

- Founding member, fmr. Chief Creative Officer, Head of Design & NYC Studio at VSCO and PLAZA, a VSCO product and design lab exploring AI and decentralized spaces for ideas and exchange

- Co-Founder, Table, a record of contemporary Asian and Pacific Islander creativity

- Independent study at The New School

- Contextually changing omni-directional navigation mechanism US9977569B

- Recon, or, surveillance architecture

- Ephemera, comments on sedimentation

- some links: cv, are.na, instagram, vsco, substack

- wayne@workofthepeople.co

Project

PLAZA Generative Interfaces

AI as Interface Maker

2024

Insight (Abstract)

Creative software is a surprisingly stagnant space. Despite the rapid evolution of technology, its user experience patterns, workflows, and features resist evolution, much less revolution. In part, this is the result of monopolistic control by industry-standard platforms that have little incentive to take risks and every incentive to keep incumbents and an aging user base in their comfort zone. Professional creative work also favors repeatable standards and patterns for the sake of efficiency and utility. Further complicating the issue, many of these patterns were designed in the era of desktop computing and have propagated for decades since. As a result, traditional creative tools are built around rigid interfaces, layer hierarchies, and menu-driven commands that assume a linear, mechanical approach to creativity: precise, technical, and often disconnected from the intuitive, messy, and fluid ways in which humans actually make art.

In contrast, real-world creativity is often nonlinear. Artists sketch loosely before refining, experiment without knowing the outcome, and make decisions based on emotion, impulse, and improvisation. Film and photo editors, musicians, and other creators may sit in a room with their colorists and producers and riff on possible directions. They discuss and sketch. They use their hands, bodies, and senses to shape ideas in real time: scraping, smudging, layering, or even destroying parts of their work to discover something new. Creativity in the physical world is dynamic and iterative, not bound by grids or dropdowns.

The gap between this organic process and the constraints of legacy software is glaring. While the tools demand precision and forethought, the act of creation is often about exploration and play. This mismatch creates friction, especially for emerging creators who think visually and emotionally, not procedurally.

Provocation

What would new patterns look like if they felt more like extensions of the human hand and mind: fluid, adaptive, and rooted in how we actually create?

Concept

The prevailing development of generative AI centers on producing the most usable, accurate, high-fidelity final output possible. Yet AI is also criticized as dehumanizing, from its unnatural (if at times interesting and novel) output to the potential replacement of human workers. If we are to reimagine creative tooling that feels more human, AI might seem like an unwelcome guest. However, the answer may simply be to reposition the context in which AI appears. AI clearly offers an opportunity for new patterns to emerge. Instead of focusing only on output, what if generative AI became akin to the colorist in the edit studio? What if AI became a conversational partner and improviser? In other words, can AI generate interfaces in addition to final output? Can AI be the maker of tools and complex machinery?

Explorations

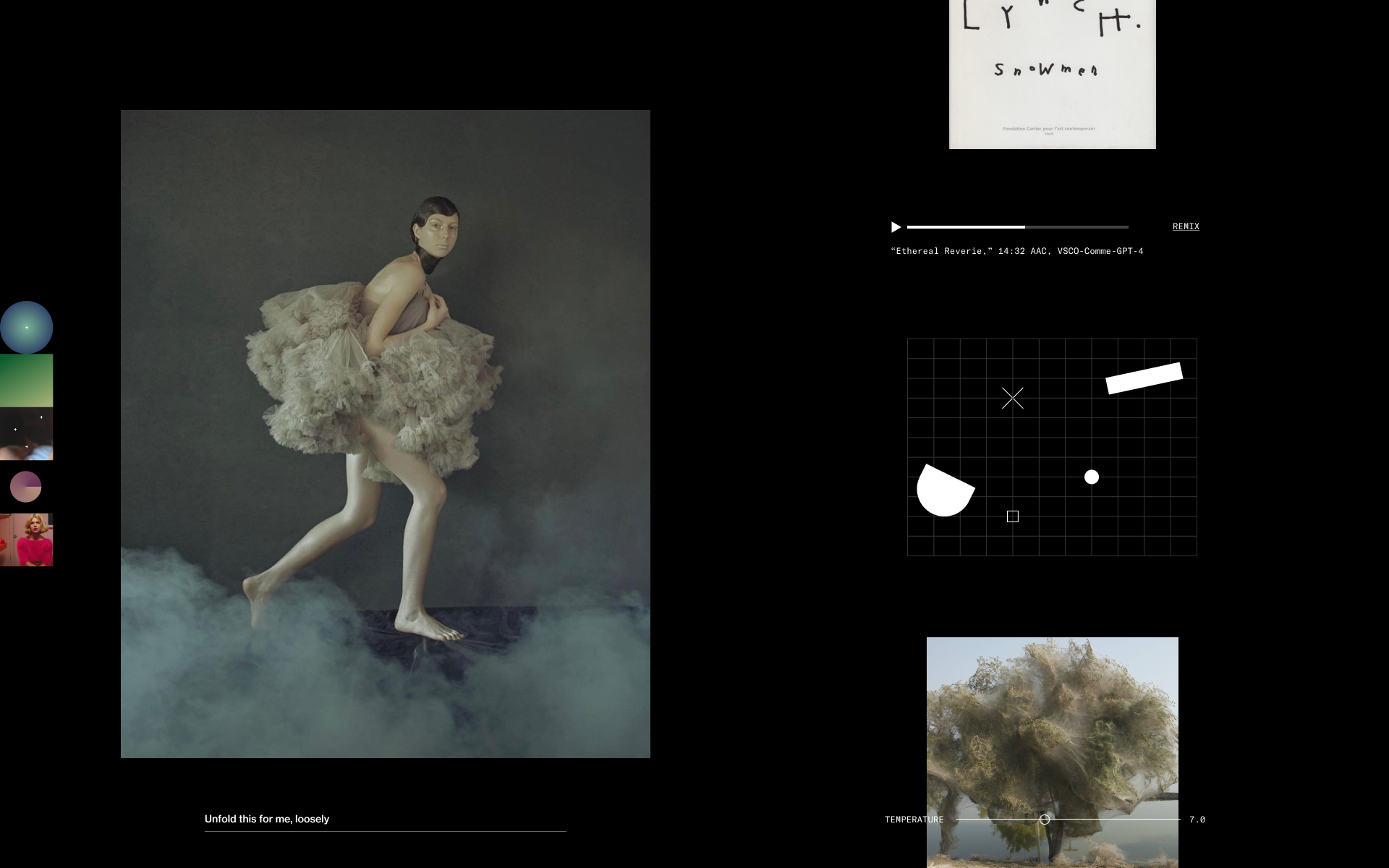

As Editor

Custom tools are automatically generated from phrases (or prompts). For example, today's software has no specific tool to "make an image bluer." Achieving that requires combining general-purpose tools like HSL, Curves, and Color Balance. Instead, AI can generate a custom "make it bluer" interface element, tailored to that specific ask. In this model, the creator's input is realized as a deeper, richer experience, and it is no longer constrained by the dimensions of those predetermined tools. Perhaps the creator has an affinity for the look of Wim Wenders' films or the tonality and emotion of a Max Richter score, or has in mind an image by photographer Gabriel Moses whose tonality they want to match. Each prompt generates a specific interface, or "tool," to fulfill that request. Finally, a series of phrases can be used collectively as a "preset": a composite color machine.

In the above example, phrases are stacked as nodes. The first phrase is "Can we make it a little bluer," and the last is "I'm thinking about Wim Wenders' Paris, Texas vibes." Each phrase generates its own tool interface. The system is free to devise any kind of UI surface that conveys its interpretation of the phrase. Here, a spherical diagram is provided in case the user wants to fine-tune. The generated tools and phrase stack can be "baked" into a look (preset). Here, the look is composed of five phrases that have generated five unique tools.
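The phrase stack can be sketched in code to show the idea of a composite color machine. This is a minimal, hypothetical sketch, not how PLAZA works. The generative model is replaced by a keyword lookup (`generate_tool`). Each "tool" is reduced to a single-strength RGB adjustment. Baking freezes the stack's order and strengths into one reusable function. The names `Tool`, `generate_tool`, `bluer`, `contrast`, and `bake` are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

Pixel = tuple[float, float, float]  # RGB, each channel in [0, 1]


def clamp(x: float) -> float:
    return max(0.0, min(1.0, x))


def bluer(p: Pixel, s: float) -> Pixel:
    # Pull red down slightly and push blue toward 1.0 by strength s.
    r, g, b = p
    return (clamp(r * (1 - 0.5 * s)), g, clamp(b + s * (1 - b)))


def contrast(p: Pixel, s: float) -> Pixel:
    # Spread each channel away from mid-gray by a factor of (1 + s).
    return tuple(clamp(0.5 + (c - 0.5) * (1 + s)) for c in p)


@dataclass
class Tool:
    phrase: str      # the prompt that produced this tool
    strength: float  # the one knob exposed for fine-tuning
    apply: Callable[[Pixel, float], Pixel]


def generate_tool(phrase: str) -> Tool:
    # Hypothetical stand-in for the generative model: a keyword lookup.
    text = phrase.lower()
    if "bluer" in text:
        return Tool(phrase, 0.2, bluer)
    if "contrast" in text:
        return Tool(phrase, 0.5, contrast)
    raise ValueError(f"no stand-in tool for phrase: {phrase!r}")


def bake(tools: list[Tool]) -> Callable[[Pixel], Pixel]:
    # Freeze the current stack order and strengths into a reusable look.
    frozen = [(t.apply, t.strength) for t in tools]

    def look(p: Pixel) -> Pixel:
        for apply, s in frozen:
            p = apply(p, s)
        return p

    return look


# Usage: stack two phrases as nodes, then bake them into a preset.
stack = [
    generate_tool("Can we make it a little bluer"),
    generate_tool("Maybe a touch more contrast"),
]
look = bake(stack)
print(look((0.5, 0.5, 0.5)))  # roughly (0.425, 0.5, 0.65)
```

Freezing strengths at bake time means a user can keep fine-tuning the live stack without silently changing a look they have already saved. A real system would let the model return arbitrary UI and parameters rather than one scalar per tool.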

As Research Assistant (or, “Unfolding”)

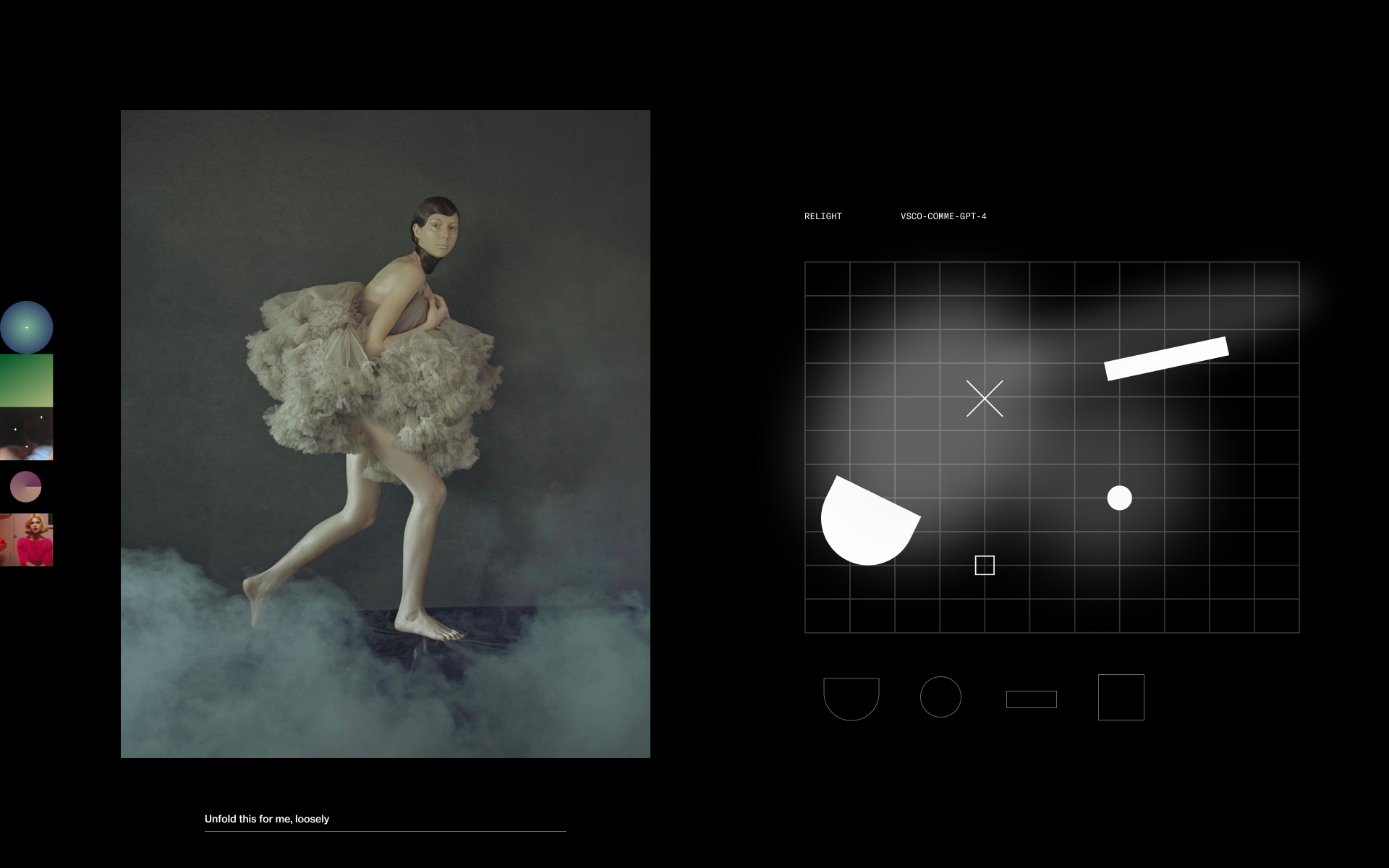

As in any creative partnership, subjective feedback and insights are part and parcel of the workflow. We can ask for an edit to be "unfolded," surfacing a variety of insights, comments, and even new interfaces based on the edit so far. For example, unfolding an image might surface a song that the image reminds the AI of, or suggest a new tool for "relighting" the image based on the positions of studio lights:

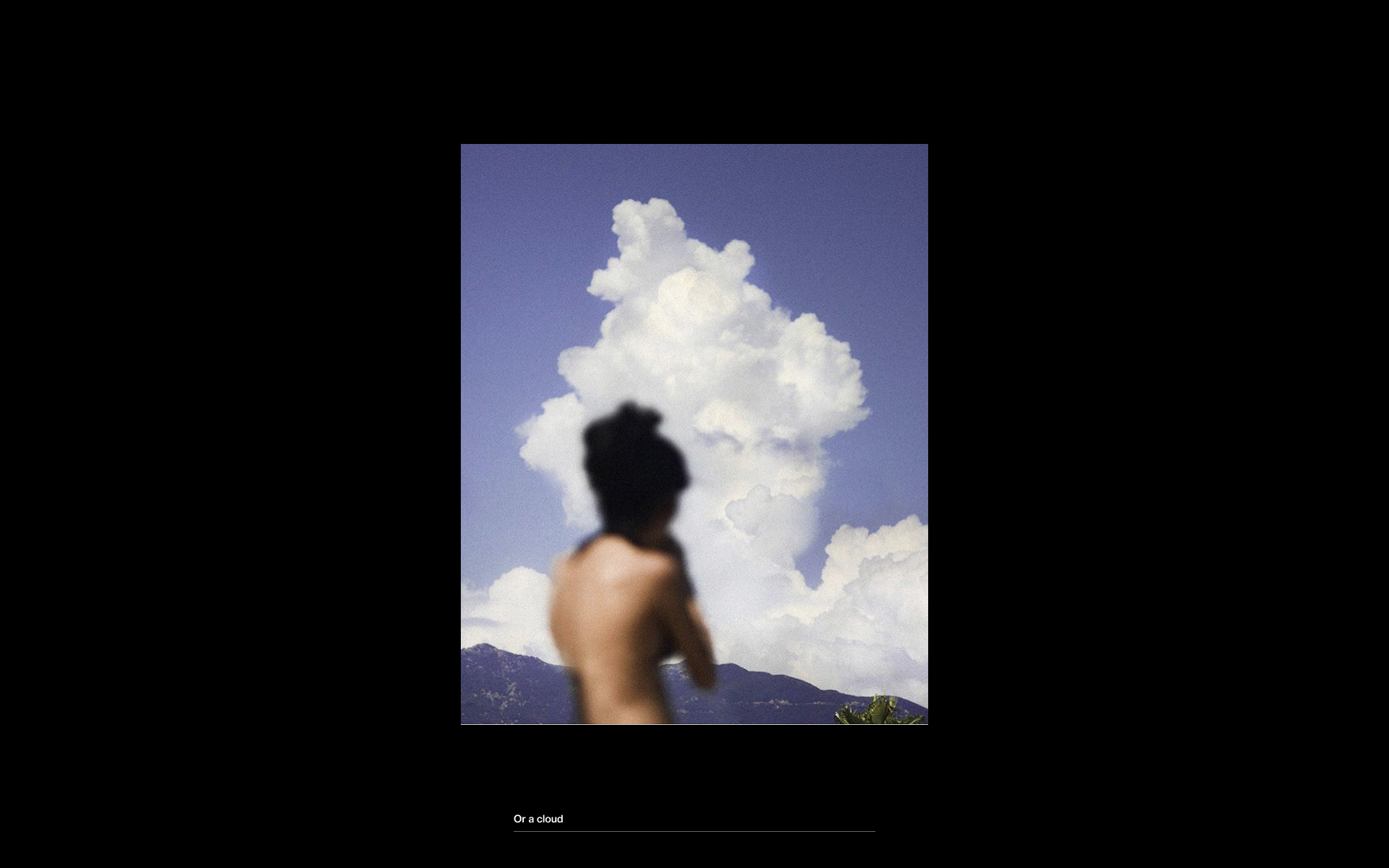

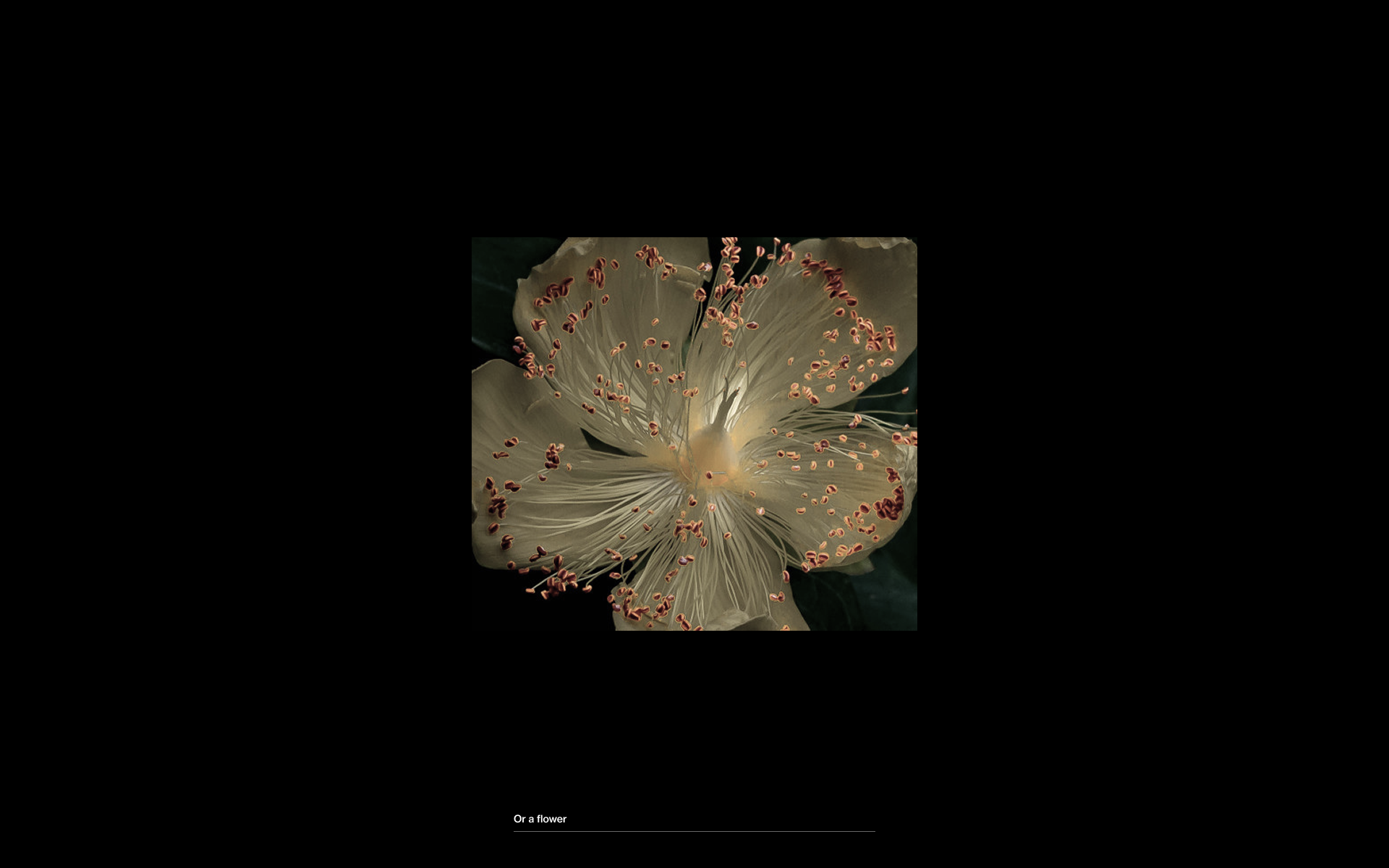

As Improviser

A more expected use case is generating new iterations from the current image, taking existing media and placing it in new contexts. That might mean converting an image into an animation, abstracting it into other objects (a dress into a cloud or a flower, for example), or seeing it in complex composites, such as a magazine layout or an e-commerce experience.

As Perpetual Conversationalist

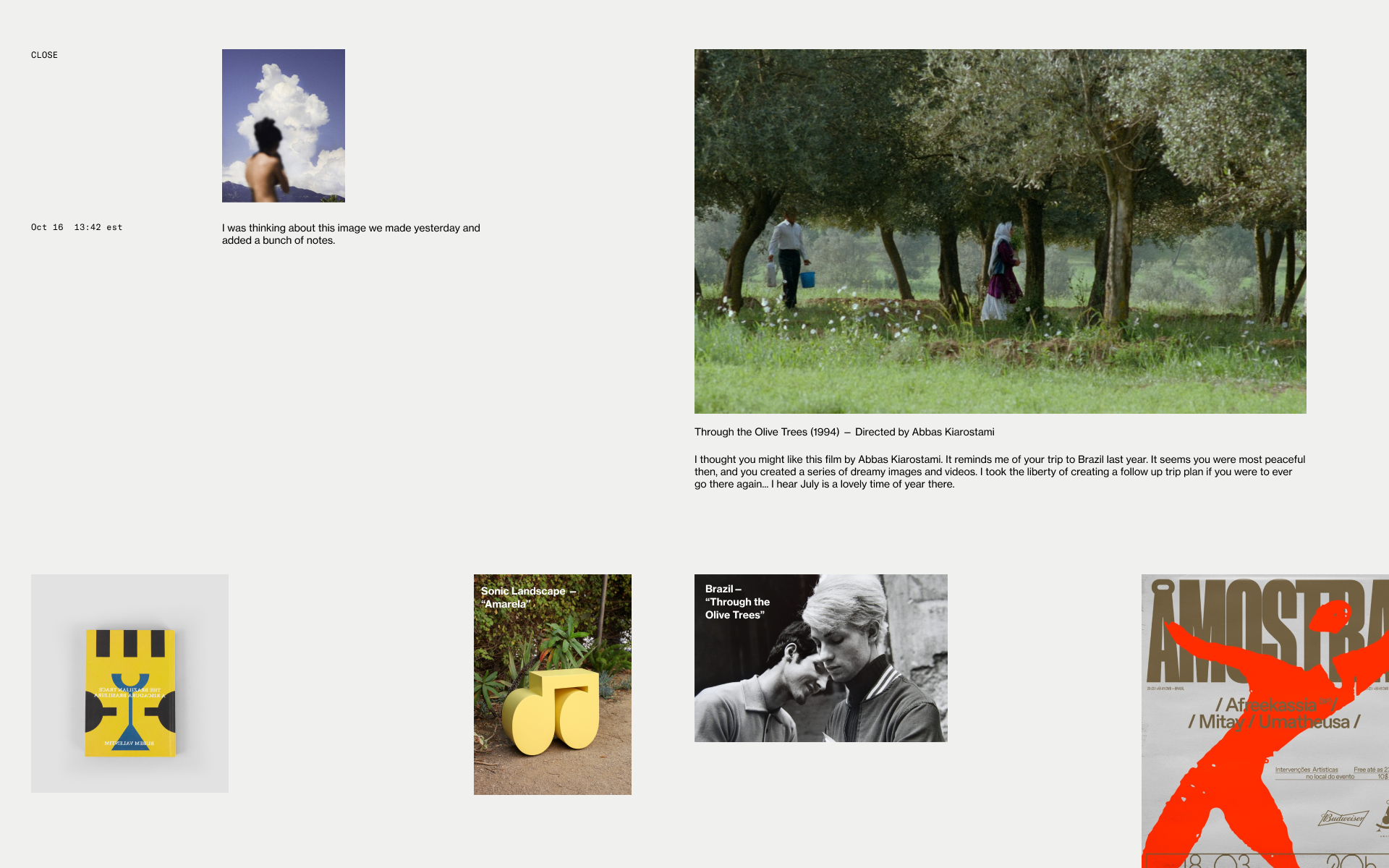

Film directors often return to the same team of collaborators, and for good reason. Cinematographers, colorists, composers, and others share a mutual understanding built over years of working together. Likewise, there is an opportunity to sustain a perpetual conversation, both for AI personalization and as a source of inspiration:

Everybody Gets an AI

New models can be spun off and packaged based on the collective history of the person or group: